The administrative burden on mental health professionals is costly, leading to burnout and taking valuable time away from patient care. Fortunately, the emergence of AI in the healthcare sector offers a possible solution. By automating many of these time-consuming manual tasks, AI is transforming the way healthcare professionals work. NovoNote by NovoPsych is our innovative AI-powered note-taking assistant that reduces administrative burden by automating clinical documentation, saving time and enhancing patient care.

However, as with any new technology, AI brings a unique set of responsibilities and pitfalls associated with its use. We understand that safeguarding yourself and your patients is of utmost importance, and we want all clinicians using NovoNote to do so responsibly.

Before you take the leap, it’s important to us that you feel comfortable and confident using this technology safely and responsibly. We are proud to lead the way in the ethical use of AI-assisted note-taking, and we think it’s time for an honest conversation about how to integrate it safely into your practice.

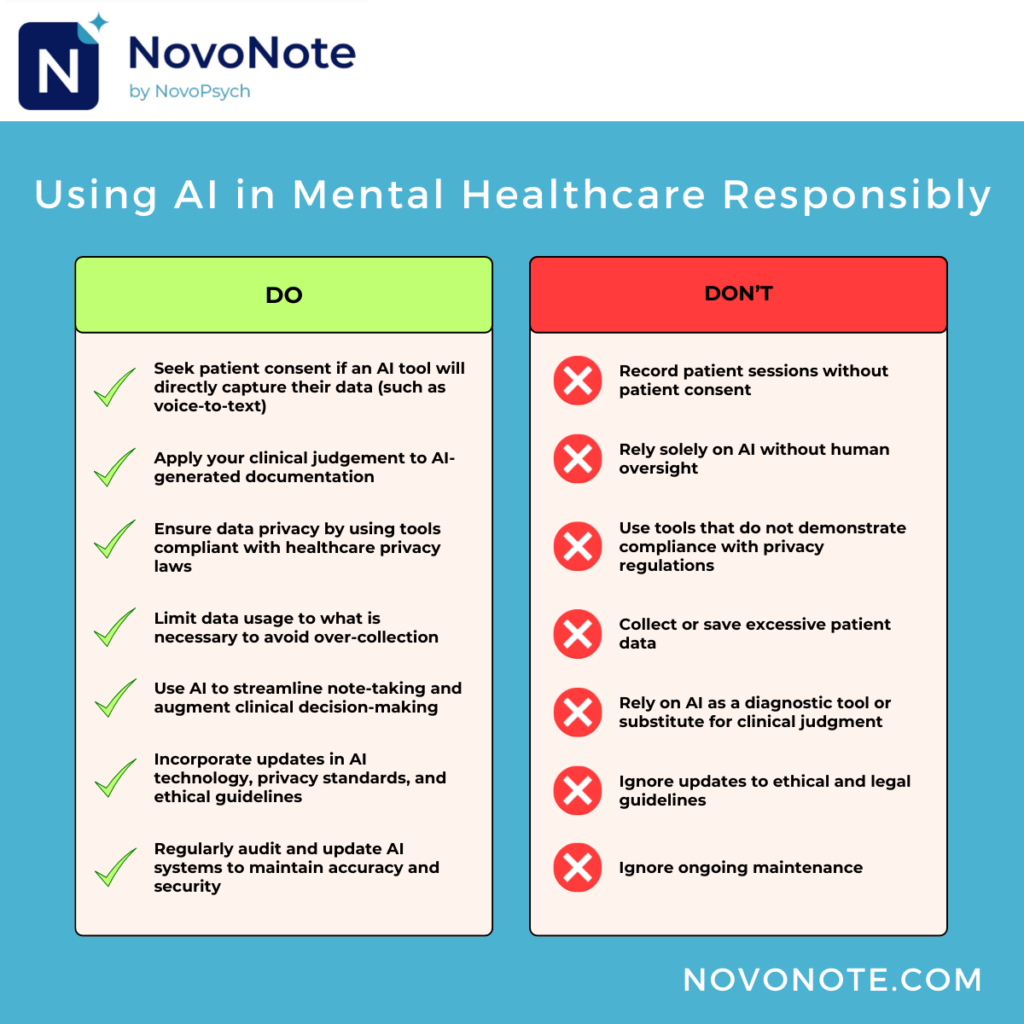

Below, we’ve outlined some practical tips and guiding principles for making the most of NovoNote while keeping ethical standards in mind. Think of these as your guidelines for using AI responsibly in your practice.

Understanding How NovoNote Works

NovoNote leverages cutting-edge Natural Language Processing (NLP) algorithms to assist healthcare professionals during patient consultations. The AI listens passively, capturing key details of the conversation, and then generates case notes that align with clinical standards.

Importantly, NovoNote is not intended to replace the clinical judgment of mental health professionals. The documentation created by NovoNote should be reviewed, edited, and finalised by the clinician before being saved to the patient’s file. Unlike manual note-taking, which can be time-consuming and prone to errors, NovoNote aims to increase both accuracy and efficiency.

Now, let’s take a closer look at the key considerations that will help you safeguard yourself and your patients.

1 – Transparency with Patients and Informed Consent

Patients have a right to understand how their data is used, especially when an AI tool captures it directly, as in voice-to-text transcription. We’ve made communicating with patients about AI easier with client fact sheets and explanatory statements found here. Be transparent, because transparency fosters trust! If a patient is uncomfortable or has expressed concerns, don’t use an AI note-taking assistant until those concerns have been addressed.

NovoNote is committed to transparency, and you can find detailed information about our strong privacy and security measures on our Security and Compliance page.

Be prepared to clearly explain NovoNote’s role in documentation, discuss data security and privacy (including how your patients’ information is safeguarded), and answer any questions they might have. It’s essential that you ask your patients for consent. Consent can be obtained verbally, for example by saying, “I’m using a microphone and an automated note-taking assistant to help me document what we talk about in today’s session. Is that OK?”, and in writing using our consent form template.

DO NOT: Press the record button and hope that nobody finds out!

2 – Privacy and Confidentiality

AI tools process sensitive patient data, so healthcare professionals must ensure compliance with relevant privacy laws and protocols. When choosing an AI note-taking assistant, spend an extra couple of minutes checking that the tool complies with the privacy laws that apply to you, such as HIPAA or the GDPR.

NovoNote is built by the NovoPsych team with privacy in mind. With a track record spanning more than a decade and the trust of over 75,000 clinicians, we know how to implement the highest security standards to protect your patients’ information. NovoNote is compliant with HIPAA, GDPR, AHPRA, and the Australian Privacy Principles (APP), giving you peace of mind regarding data privacy. Protecting your patients’ information is our top priority.

DO NOT: Use an AI tool if you don’t know how it stores and uses your patients’ data!

3 – Clinical Oversight

AI tools are designed to support clinical decision-making, not to replace mental health professionals. Using NovoNote within its intended scope, as a documentation aid rather than a diagnostic or decision-making tool, ensures its appropriate and ethical application. Healthcare professionals must review and verify AI-generated notes for accuracy, quality, and relevance, maintaining their role as the primary decision-makers in patient care. NovoNote provides powerful summaries, but your review and edits before saving are what make the notes clinically sound and bring them up to your own standards.

DO NOT: Copy and paste AI-generated notes into your patient record without reviewing them!

4 – Limit Data Usage

Limit the data collected to what is necessary for the specific task. NovoNote is built to support you in doing this. Audio recordings are never saved; instead, audio is immediately converted into a redacted text transcript. Only summary notes are retained as part of the patient file, and transcripts are deleted by default.

DO NOT: Collect or save more patient data than your documentation requires. Excess data increases security risks and regulatory concerns.

5 – Stay Within AI’s Role

As these tools become more widely used, there is a risk that healthcare professionals will over-rely on AI and pay less attention to clinical details, resulting in errors that change the meaning of clinical information. When producing documentation, AI cannot integrate information that was not explicitly discussed in the session, such as your professional opinion, test results, history, discharge summaries, or the patient’s non-verbal cues.

Use NovoNote to streamline note-taking and augment decision-making, not as a diagnostic tool or substitute for clinical skills or judgment. As with any clinical tool, the healthcare professional remains ultimately accountable for the quality of the documentation content and therefore liable for any errors.

DO NOT: Assume that AI has and can apply your expertise, knowledge and experience. You are irreplaceable!

6 – Ongoing Education

To use AI responsibly in healthcare, it’s important to stay abreast of the latest developments in privacy standards, ethical guidelines, and AI technology. The AI landscape is evolving rapidly, and staying informed helps ensure that you’re using these tools in line with current best practices and legal requirements. Regularly updating your knowledge will not only help you get the most out of tools like NovoNote but also help you remain compliant with the latest regulations, safeguarding you and your patients.

By engaging in continuous professional development, whether through workshops, webinars, or industry publications, healthcare professionals can make informed decisions about how they integrate AI into their practice. This commitment to learning supports the safe, ethical, and effective use of AI technologies in clinical settings.

DO NOT: Fall into a “set and forget” mindset!

7 – Regularly Audit and Update AI Systems

Regularly update your AI tools to maintain accuracy and security. AI technologies are dynamic, meaning they require ongoing maintenance to stay effective and relevant. Outdated systems can introduce errors, biases, or security vulnerabilities that compromise both patient safety and data privacy. Regular audits can help identify these issues early, allowing for timely updates that align with the latest ethical guidelines and privacy laws. NovoNote benefits from continuous improvements and updates, helping you stay compliant with evolving privacy laws and ethical guidelines.

DO NOT: Ignore ongoing maintenance, as outdated systems can lead to errors and vulnerabilities!

Conclusion

Incorporating AI tools like NovoNote into your practice can greatly enhance efficiency, reduce administrative burdens, and allow you to focus more on patient care. However, using AI responsibly means upholding high standards of privacy, patient consent, and professional oversight. By adhering to these best practices, you can transform your practice while ensuring that you and your patients are safeguarded.